
Steal My Blueprint to Build and Deploy RAGs [In Minutes].

Most RAGs are built on this stack; why would you redo it every time?

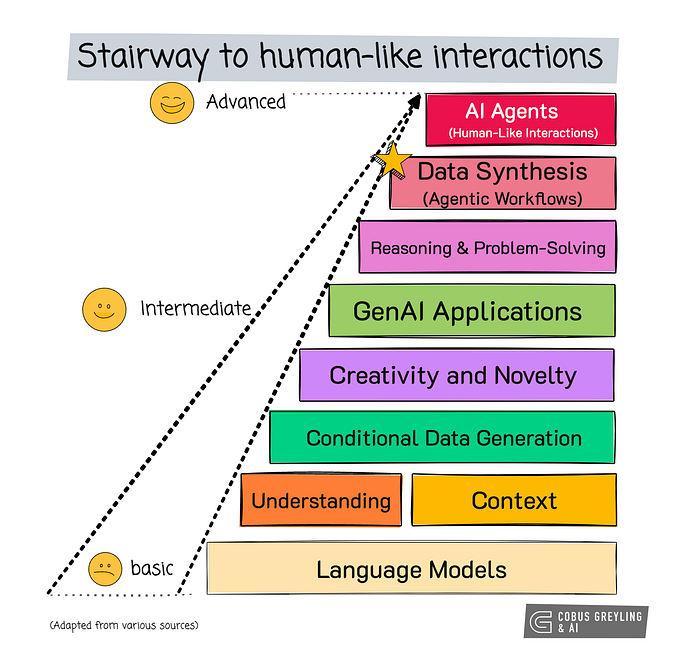

RAGs make LLMs useful.

Yes, before RAG, LLMs were little more than toys. Beyond trivial tasks like sentiment classification, they had few real applications. This was mainly because an LLM can't learn new information on the go: its knowledge is frozen at training time. Anything real-time simply didn't work.

When RAGs came into practice, this changed.

RAG let us build LLM apps on real-time data, and intelligent apps around our private data, by retrieving the relevant documents at query time and handing them to the model as context.
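To make the retrieve-then-generate idea concrete, here is a minimal, dependency-free sketch of the retrieval and prompt-building half of a RAG pipeline. It assumes a toy bag-of-words "embedding" with cosine similarity as a stand-in for a real embedding model (such as OpenAI's) and a vector database (such as ChromaDB). `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical helpers, and the documents are made-up examples. Sending the final prompt to an LLM is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts.

    Assumption: stands in for a real embedding model; it only matches exact words.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (a linear scan, not a real index)."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user's question with the retrieved context."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


# Hypothetical private documents.
docs = [
    "Our refund policy allows returns within 30 days of purchase.",
    "The office is closed on public holidays.",
    "Support tickets are answered within one business day.",
]
question = "How many days do I have to return a purchase?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
print(prompt)
```

In a production stack, the toy `embed` would roughly be replaced by an embedding API call and the list scan by a vector-store query; the retrieve-then-augment shape stays the same.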

But if you ask anyone who builds RAGs what their tech stack is, it's like listening to an old, broken tape recorder. The first few phases of all RAG pipelines are very similar, with few alternatives for the core technologies.
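Those shared early phases typically include loading documents, splitting them into chunks, embedding the chunks, and storing them. As a rough illustration of the splitting step, here is a plain-Python fixed-size character chunker with overlap. It is a sketch, not LangChain's text splitter; `chunk_text`, `chunk_size`, and `overlap` are hypothetical names, and the default sizes are arbitrary.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that share `overlap` characters.

    The overlap helps keep a sentence that straddles a boundary retrievable
    from at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=1))
```

Real splitters usually prefer to cut on paragraph or sentence boundaries rather than raw character counts; the fixed window here just keeps the idea visible.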

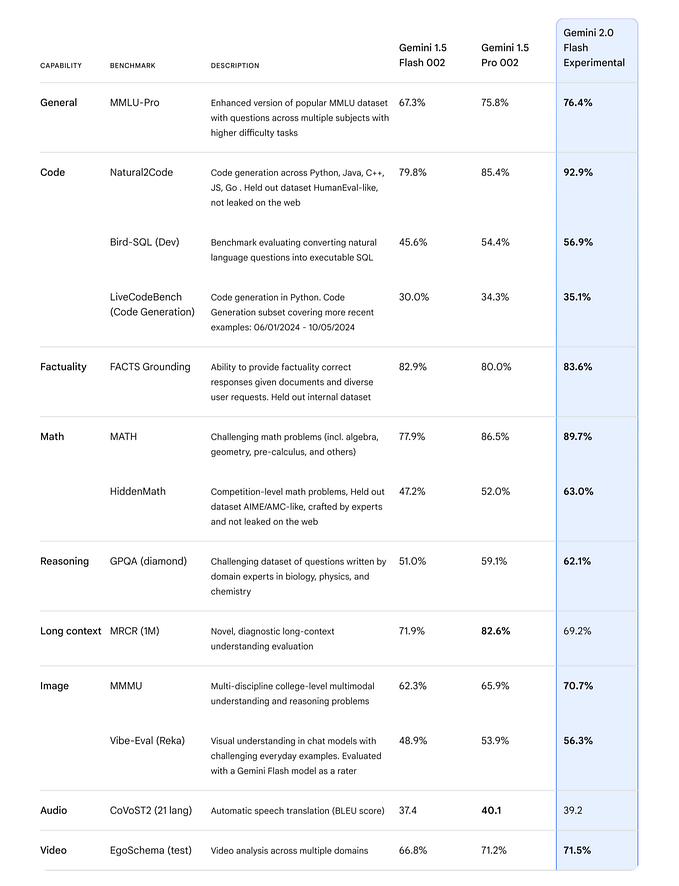

My go-to starter app uses the following technologies: LangChain (with LlamaIndex being the only comparable alternative), ChromaDB, and OpenAI for both the LLM and the embeddings. I often develop in a Docker environment because it makes results easy to reproduce on someone else's computer (among its many other benefits).

For my needs, I rarely package them. When I do, I use either Streamlit or Gradio. I used to work with Django. It's a…